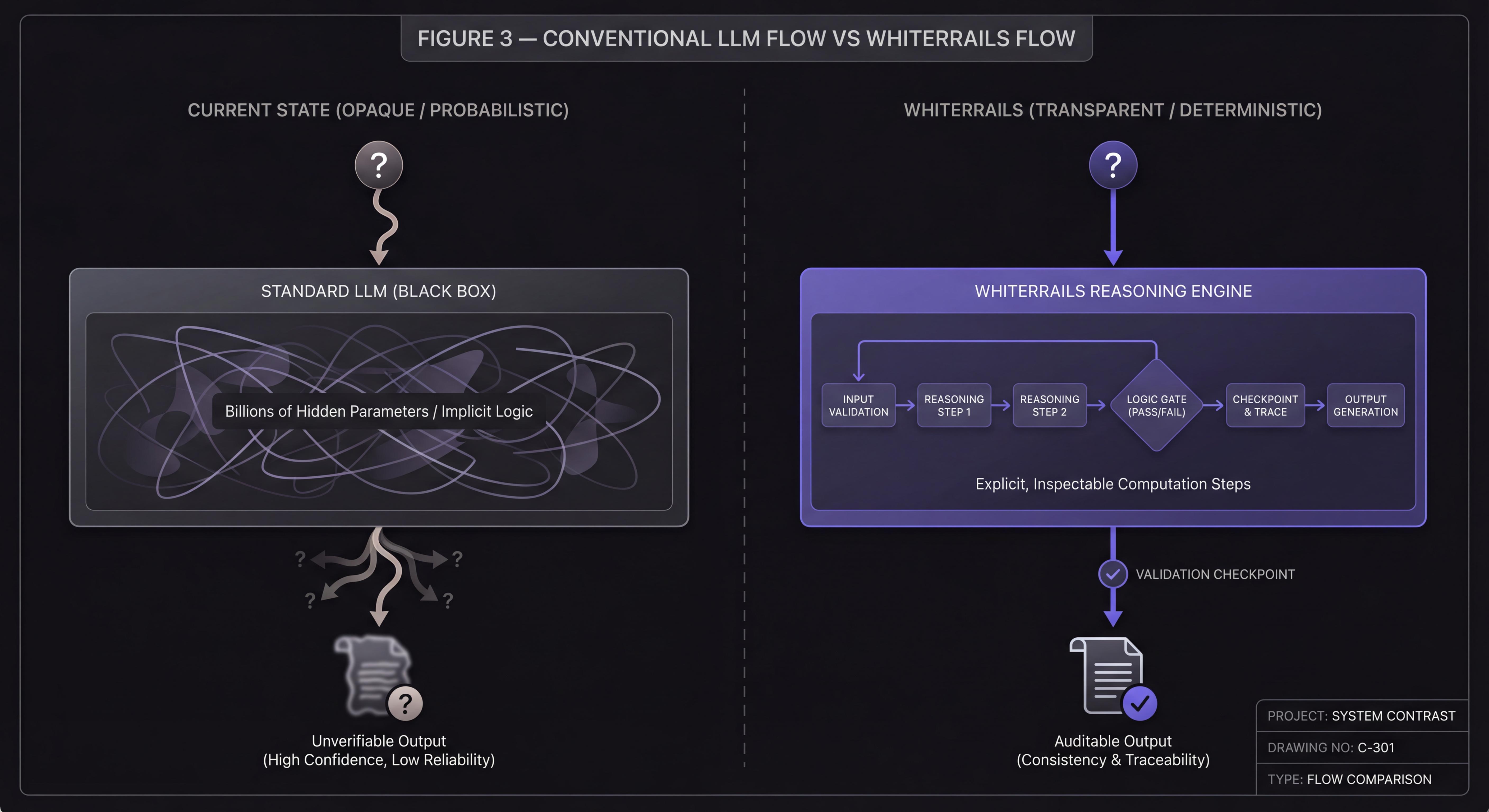

Large Language Models (LLMs) have accelerated software development, knowledge work, and automation. However, their reasoning remains opaque, non-deterministic, and difficult to validate. Enterprises require systems that not only generate answers, but justify them—systems that can explain how decisions were made, reproduce results, and integrate with existing workflows without risking hallucinations or compliance failures.

Whiterails introduces a new approach: a modular reasoning engine that transforms natural language queries into structured, verifiable reasoning workflows. Rather than replacing LLMs, Whiterails complements them—acting as an auditable cognitive layer that interprets user intent, decomposes problems, executes deterministic logic, and integrates external tools or models only when necessary.

1. Introduction

The rapid adoption of generative AI has exposed a critical gap between language fluency and reliable reasoning. Current LLMs excel at producing natural language, but:

- They can generate incorrect output with high confidence.

- They lack traceability and explainability.

- They do not meet enterprise requirements for determinism or auditability.

- They cannot be easily aligned to strict procedural or regulatory constraints.

- Their "reasoning" is implicit—embedded within billions of opaque parameters.

Enterprises do not merely need models that produce answers. They need systems that:

- Explain their steps

- Guarantee consistency

- Integrate with external systems

- Recover from uncertainty

- Expose a transparent chain of logic

Whiterails was founded to close this gap.

Unlike conventional agent frameworks that simply orchestrate LLM calls, Whiterails enforces explicit structural and semantic constraints over every reasoning plan. Each plan must pass verification before execution, ensuring that no model can silently alter intent, reorder steps, or drift from required domain semantics.

2. The Whiterails Vision

Whiterails is building the Reasoning Layer for Enterprise AI.

Our goal is not to replace existing AI models, but to structure, control, and validate how they are used. We provide an execution environment where:

- Natural language intent is transformed into structured reasoning plans.

- These plans are executed deterministically by a reasoning engine.

- LLMs are invoked selectively as modules, not as the system of record.

- Every step is observable, testable, and reproducible.

Instead of relying on emergent behavior inside a model, Whiterails exposes reasoning as explicit, inspectable computation.

3. Design Principles

Whiterails follows five core principles:

3.1. Determinism

Enterprise workflows require guarantees. Whiterails ensures that the same input, processed with the same plan and configuration, produces the same reasoning trace and output.
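Determinism of this kind can be checked mechanically: serialize each reasoning trace in a canonical form and compare digests across runs. The sketch below assumes a trace is a list of JSON-serializable step records; `trace_digest` and `is_reproducible` are hypothetical helpers for illustration, not a published Whiterails API.

```python
import hashlib
import json
from typing import Any


def trace_digest(trace: list[dict[str, Any]]) -> str:
    """Return a SHA-256 digest of a reasoning trace in canonical JSON form.

    Keys are sorted and separators are fixed, so two traces with the same
    content serialize to the same bytes regardless of dict insertion order.
    """
    canonical = json.dumps(trace, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def is_reproducible(run_a: list[dict[str, Any]], run_b: list[dict[str, Any]]) -> bool:
    """Treat two executions as reproducible when their trace digests match."""
    return trace_digest(run_a) == trace_digest(run_b)


first = [{"step": 1, "op": "parse", "out": {"b": 2, "a": 1}}]
second = [{"out": {"a": 1, "b": 2}, "op": "parse", "step": 1}]
print(is_reproducible(first, second))  # True: same content, different key order
```

A digest comparison only detects divergence between runs; it does not by itself make a non-deterministic step deterministic.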

3.2. Transparency

The system exposes an internal representation of reasoning that can be logged, audited, versioned, and verified.

3.3. Modularity

LLMs, external APIs, internal tools, and symbolic logic can all be orchestrated within the same reasoning pipeline.

3.4. Fail-safe Reasoning

If a step is uncertain or incomplete, the system identifies missing information and resolves it via retrieval, tool invocation, or user clarification.
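The resolution order described above (retrieval or tools first, the user last) can be sketched as a small function. Everything here is hypothetical: `resolve_missing`, the `Resolution` record, and the resolver signature are illustrative assumptions, and `lookup_currency` merely stands in for a retrieval step.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A resolver tries to supply a value for a named field; it returns None if it cannot.
Resolver = Callable[[str, dict], Optional[object]]


@dataclass
class Resolution:
    context: dict
    questions: list[str] = field(default_factory=list)

    @property
    def ready(self) -> bool:
        # Execution may proceed only when no clarification is outstanding.
        return not self.questions


def resolve_missing(context: dict, required: list[str], resolvers: list[Resolver]) -> Resolution:
    """Fill missing required fields using resolvers in a fixed order.

    Fields that no resolver can supply become clarification questions, so the
    plan is held back instead of executing on incomplete information.
    """
    filled = dict(context)
    questions: list[str] = []
    for name in required:
        if filled.get(name) is not None:
            continue
        for resolver in resolvers:  # a fixed order keeps resolution deterministic
            value = resolver(name, filled)
            if value is not None:
                filled[name] = value
                break
        else:  # no resolver succeeded: ask the user
            questions.append(f"Please provide a value for '{name}'.")
    return Resolution(filled, questions)


def lookup_currency(name: str, ctx: dict) -> Optional[object]:
    # Stand-in for a retrieval step that can only answer "currency".
    return "EUR" if name == "currency" else None


result = resolve_missing({"amount": 120}, ["amount", "currency", "due_date"], [lookup_currency])
print(result.ready)      # False
print(result.questions)  # ["Please provide a value for 'due_date'."]
```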

3.5. Vendor Independence

Whiterails works with any LLM provider (OpenAI, Anthropic, Google, on-prem models). Enterprises retain full control over sensitive data.
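Vendor independence usually comes down to coding the engine against a narrow provider interface rather than a specific SDK. A minimal sketch, assuming a single text-completion method is enough; `TextModel`, `EchoModel`, and `phrase` are hypothetical names, and a real adapter would wrap a vendor client or an on-prem endpoint.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface a provider adapter must satisfy (hypothetical)."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in adapter; a real one would call a hosted API or an on-prem model."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def build_registry(*models: TextModel) -> dict[str, TextModel]:
    return {m.name: m for m in models}


def phrase(registry: dict[str, TextModel], provider: str, text: str) -> str:
    """Route a linguistic task to whichever provider the deployment config names."""
    if provider not in registry:
        raise KeyError(f"provider '{provider}' is not configured")
    return registry[provider].complete(text)


registry = build_registry(EchoModel("hosted-a"), EchoModel("on-prem"))
print(phrase(registry, "on-prem", "Summarize step 3"))  # [on-prem] Summarize step 3
```

Swapping vendors then means registering a different adapter; the engine code that calls `phrase` does not change.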

4. Architecture Overview

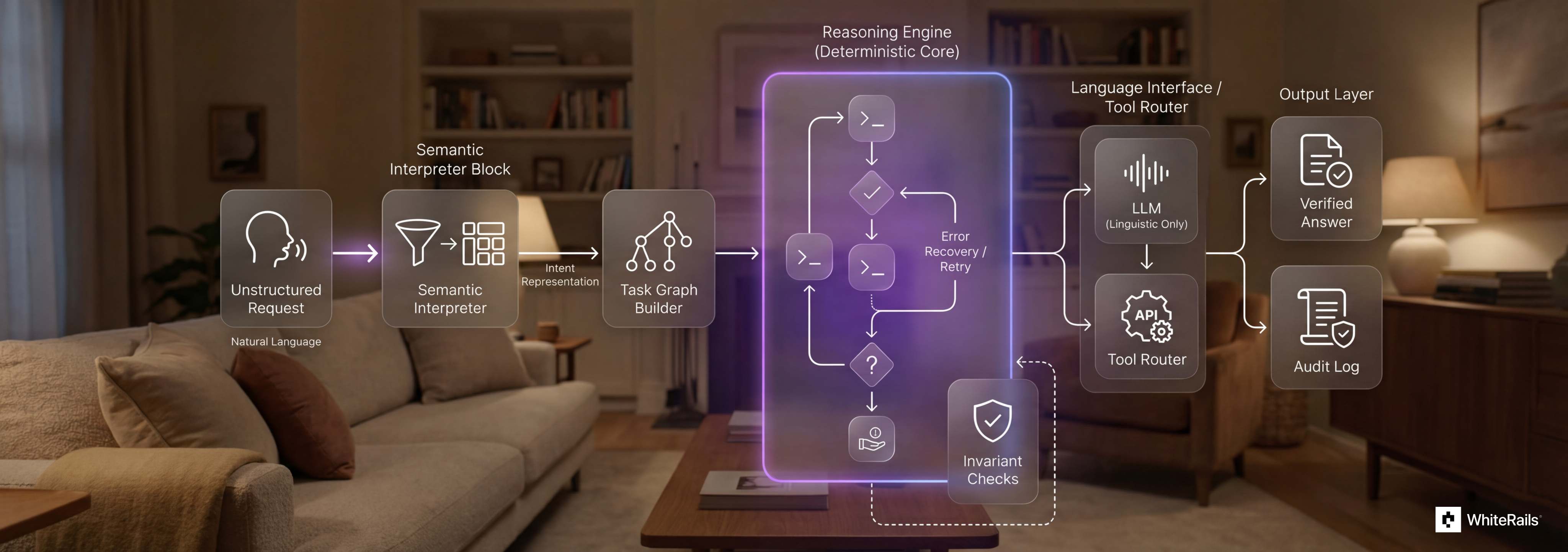

At its core, Whiterails consists of three cooperating components (4.1 to 4.3), complemented by two verification layers (4.4 and 4.5):

4.1. Semantic Interpreter

Intent → Structure

The interpreter converts natural language into a structured internal representation, including:

- entities

- operations

- goals

- constraints

- dependencies
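To make these five elements concrete, here is one possible shape for that representation, using the request "total the open invoices for ACME" as an example. The class and field names are assumptions for illustration; dependencies are ordered with Python's standard `graphlib.TopologicalSorter`, which rejects cycles.

```python
from dataclasses import dataclass, field
from graphlib import CycleError, TopologicalSorter


@dataclass(frozen=True)
class Operation:
    id: str
    op: str                        # operator name, e.g. "filter" or "sum"
    inputs: tuple[str, ...] = ()   # entity names or ids of other operations


@dataclass
class IntentStructure:
    """Hypothetical structured representation of one natural-language request."""

    entities: dict[str, object]
    operations: list[Operation]
    goal: str                      # id of the operation whose output answers the request
    constraints: list[str] = field(default_factory=list)

    def dependencies(self) -> dict[str, set[str]]:
        # An operation depends on any other operation it consumes; entities are leaves.
        op_ids = {o.id for o in self.operations}
        return {o.id: {i for i in o.inputs if i in op_ids} for o in self.operations}

    def execution_order(self) -> list[str]:
        """Return a dependency-respecting order; raise ValueError on bad references or cycles."""
        known = set(self.entities) | {o.id for o in self.operations}
        for o in self.operations:
            unknown = [i for i in o.inputs if i not in known]
            if unknown:
                raise ValueError(f"{o.id} references unknown inputs {unknown}")
        if self.goal not in {o.id for o in self.operations}:
            raise ValueError(f"goal {self.goal!r} is not an operation")
        try:
            return list(TopologicalSorter(self.dependencies()).static_order())
        except CycleError as exc:
            raise ValueError(f"cyclic dependencies: {exc.args[1]}") from exc


request = IntentStructure(
    entities={"invoices": "ledger.invoices", "customer": "ACME"},
    operations=[
        Operation("s2", "sum", ("s1",)),
        Operation("s1", "filter", ("invoices", "customer")),
    ],
    goal="s2",
    constraints=["status == 'open'"],
)
print(request.execution_order())  # ['s1', 's2']
```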

4.2. Reasoning Engine

Structure → Deterministic Execution

The engine executes structured reasoning plans step by step. It provides:

- deterministic evaluation

- step-level visibility

- error handling and recovery

- integration with external tools

- conditions, loops, branches

- state management
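A minimal sketch of deterministic, step-level execution, assuming each operator is a pure function looked up by name in a registry. `Step`, `execute`, and the operator names are hypothetical; branches and loops are omitted to keep the example short. Each step is written to the trace, and the first failure halts the run.

```python
from dataclasses import dataclass
from typing import Any, Callable

Operator = Callable[..., Any]


@dataclass
class Step:
    id: str
    op: str
    args: tuple[str, ...]  # names of state entries passed to the operator


def execute(
    steps: list[Step], state: dict[str, Any], operators: dict[str, Operator]
) -> tuple[dict[str, Any], list[dict[str, Any]]]:
    """Run steps in order, storing each result in state under the step id.

    Every step is logged. On the first failure the error is recorded and
    execution stops, so no later step runs on a partial result.
    """
    state = dict(state)  # never mutate the caller's copy
    trace: list[dict[str, Any]] = []
    for step in steps:
        entry: dict[str, Any] = {"step": step.id, "op": step.op, "inputs": list(step.args)}
        try:
            fn = operators[step.op]
            result = fn(*(state[name] for name in step.args))
        except Exception as exc:  # unknown operator, missing input, or operator error
            entry["status"] = "error"
            entry["error"] = f"{type(exc).__name__}: {exc}"
            trace.append(entry)
            break
        state[step.id] = result
        entry.update(status="ok", output=result)
        trace.append(entry)
    return state, trace


ops: dict[str, Operator] = {
    "filter_open": lambda rows: [r for r in rows if r["status"] == "open"],
    "total": lambda rows: sum(r["amount"] for r in rows),
}
rows = [
    {"status": "open", "amount": 120},
    {"status": "paid", "amount": 80},
    {"status": "open", "amount": 30},
]
plan = [Step("open", "filter_open", ("rows",)), Step("sum", "total", ("open",))]
final, trace = execute(plan, {"rows": rows}, ops)
print(final["sum"])                  # 150
print([t["status"] for t in trace])  # ['ok', 'ok']
```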

4.3. Language Interface

Structure → Natural Response

Whiterails converts structured reasoning traces back into natural language, so users can work with results conversationally while the underlying facts remain exactly those recorded in the trace. LLMs are used here strictly as linguistic modules, not as decision engines.
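One way to keep an LLM in a purely linguistic role is to render the trace with a deterministic template and accept a model's rephrasing only if it still carries every traced value. The sketch assumes trace entries with `op` and `output` fields; `render_trace`, `respond`, and the `paraphrase` callable are hypothetical, and the substring test is deliberately crude.

```python
from typing import Any, Callable, Optional


def render_trace(trace: list[dict[str, Any]]) -> str:
    """Deterministic template: one sentence per step, facts taken only from the trace."""
    lines = [f"Step {i}: {t['op']} produced {t['output']!r}." for i, t in enumerate(trace, start=1)]
    return " ".join(lines)


def respond(trace: list[dict[str, Any]], paraphrase: Optional[Callable[[str], str]] = None) -> str:
    """Use a language model only to rephrase; reject its text if any traced value is lost."""
    baseline = render_trace(trace)
    if paraphrase is None:
        return baseline
    candidate = paraphrase(baseline)
    required = [str(t["output"]) for t in trace]
    if all(value in candidate for value in required):
        return candidate
    return baseline  # fail closed: keep the exact template rather than a lossy rewrite


def faithful(text: str) -> str:
    # Stand-in for an LLM rephrasing that keeps the facts.
    return "There are 2 open invoices totalling 150."


def lossy(text: str) -> str:
    # Stand-in for an LLM rephrasing that changes a value.
    return "The open invoices total about 140."


trace = [{"op": "filter_open", "output": 2}, {"op": "total", "output": 150}]
print(respond(trace))  # Step 1: filter_open produced 2. Step 2: total produced 150.
```

A production check would compare structured fields rather than substrings, since a value such as `2` also matches inside `120`.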

4.4. Verified Execution Layer

Plan → Verified Execution

While many AI systems accept model-generated reasoning as-is, Whiterails treats every reasoning plan as a candidate that must be verified before execution.

Each plan passes through a Verified Execution Layer—informally known as the Kill Gate—which checks the plan against explicit structural and domain-level constraints. These checks ensure:

- intent stability

- preservation of step ordering

- absence of silent insertions or deletions

- consistency of domain semantics

- plan completeness and coherence, so that execution proceeds only when both hold

If any constraint is violated, Whiterails operates in a fail-closed manner: the plan is rejected, and the system either requests clarification or regenerates a constrained plan.

This mechanism provides enterprises with predictable, auditable, and tamper-resistant reasoning flows.
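The checks listed above can be expressed as a function that returns every violation, plus a gate that refuses to pass a plan with any. This is a sketch under stated assumptions: the candidate is compared against a reference plan (for example, the one the Semantic Interpreter produced), and `Plan`, `PlanStep`, `verify`, and `gate` are hypothetical names rather than the Kill Gate's actual interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanStep:
    id: str
    op: str
    inputs: tuple[str, ...] = ()


@dataclass(frozen=True)
class Plan:
    intent: str
    steps: tuple[PlanStep, ...]


def verify(candidate: Plan, reference: Plan, allowed_ops: set[str], entities: set[str]) -> list[str]:
    """Return every constraint violation; an empty list means the plan may execute."""
    violations: list[str] = []
    if candidate.intent != reference.intent:
        violations.append("intent changed")

    cand_ids = [s.id for s in candidate.steps]
    ref_ids = [s.id for s in reference.steps]
    cand_set, ref_set = set(cand_ids), set(ref_ids)
    if cand_set - ref_set:
        violations.append(f"inserted steps: {sorted(cand_set - ref_set)}")
    if ref_set - cand_set:
        violations.append(f"deleted steps: {sorted(ref_set - cand_set)}")
    # Compare the relative order of the steps both plans share.
    if [i for i in cand_ids if i in ref_set] != [i for i in ref_ids if i in cand_set]:
        violations.append("step order changed")

    # Domain and completeness checks: known operators, inputs available when needed.
    available = set(entities)
    for step in candidate.steps:
        if step.op not in allowed_ops:
            violations.append(f"{step.id}: operator '{step.op}' outside domain")
        missing = [i for i in step.inputs if i not in available]
        if missing:
            violations.append(f"{step.id}: unresolved inputs {missing}")
        available.add(step.id)
    return violations


def gate(candidate: Plan, reference: Plan, allowed_ops: set[str], entities: set[str]) -> Plan:
    """Fail closed: raise instead of returning a plan that broke any constraint."""
    problems = verify(candidate, reference, allowed_ops, entities)
    if problems:
        raise PermissionError("plan rejected: " + "; ".join(problems))
    return candidate


ALLOWED = {"filter", "sum"}
ENTITIES = {"invoices"}
reference = Plan("total open invoices", (
    PlanStep("s1", "filter", ("invoices",)),
    PlanStep("s2", "sum", ("s1",)),
))
tampered = Plan("total open invoices", (
    PlanStep("s1", "filter", ("invoices",)),
    PlanStep("s1b", "export", ("s1",)),  # silently inserted, off-domain step
    PlanStep("s2", "sum", ("s1",)),
))
print(verify(tampered, reference, ALLOWED, ENTITIES))
```

Here the tampered plan is rejected for both the silent insertion and the off-domain operator, while the reference plan passes with no violations.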

4.5. Speculative Validation Architecture (SVA)

Constraint → Generation-Time Enforcement

Beyond verifying completed plans, Whiterails constrains model behavior during generation via its Speculative Validation Architecture (SVA).

SVA selectively restricts the model's output space based on domain-specific rules, ensuring that structurally invalid or irrelevant reasoning paths cannot be produced. Instead of correcting errors post-hoc, SVA prevents them at the token-generation stage.

SVA functions as a lightweight validation layer that:

- identifies applicable constraints for each request

- loads domain-appropriate rule sets

- applies non-intrusive restrictions during reasoning generation

- monitors performance and the integrity of constraint enforcement

This preserves determinism and reduces semantic drift even when interacting with non-deterministic language models.
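The general technique behind generation-time enforcement is constrained decoding: before each token is chosen, tokens that the active rule set forbids are masked out. SVA's own internals are not described in this edition, so the sketch below substitutes a toy finite-state grammar and a fake scorer for real model logits; `GRAMMAR`, `constrained_decode`, and `noisy_model` are illustrative assumptions.

```python
import math
from typing import Callable

# Toy grammar for one plan line: STEP <op> ( <arg> ) END.
# Each state maps the tokens it allows to the state each token leads to.
GRAMMAR: dict[str, dict[str, str]] = {
    "start": {"STEP": "op"},
    "op": {"filter": "open", "sum": "open"},
    "open": {"(": "arg"},
    "arg": {"invoices": "close", "s1": "close"},
    "close": {")": "end"},
    "end": {"END": "done"},
}

Scorer = Callable[[list[str]], dict[str, float]]  # stands in for model logits


def constrained_decode(score: Scorer, max_tokens: int = 10) -> list[str]:
    """Greedy decoding where tokens the grammar forbids are masked to -inf."""
    state, output = "start", []
    while state != "done" and len(output) < max_tokens:
        logits = score(output)
        allowed = GRAMMAR[state]
        masked = {tok: (logit if tok in allowed else -math.inf) for tok, logit in logits.items()}
        token = max(masked, key=masked.get)
        if token not in allowed:
            raise RuntimeError(f"no allowed token was scored in state '{state}'")
        output.append(token)
        state = allowed[token]
    if state != "done":
        raise RuntimeError("token budget exhausted before the grammar completed")
    return output


VOCAB = ["STEP", "filter", "sum", "delete", "(", ")", "invoices", "s1", "END", "DROP"]


def noisy_model(prefix: list[str]) -> dict[str, float]:
    # Fake model: a fixed, position-dependent preference, plus a strong
    # bias toward the off-grammar token "DROP" at every position.
    scores = {tok: -float(abs(i - len(prefix))) for i, tok in enumerate(VOCAB)}
    scores["DROP"] = 5.0
    return scores


print(constrained_decode(noisy_model))  # ['STEP', 'filter', '(', 'invoices', ')', 'END']
```

Although the fake model scores `DROP` highest at every position, it can never be emitted; an unconstrained greedy decoder would produce nothing else.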

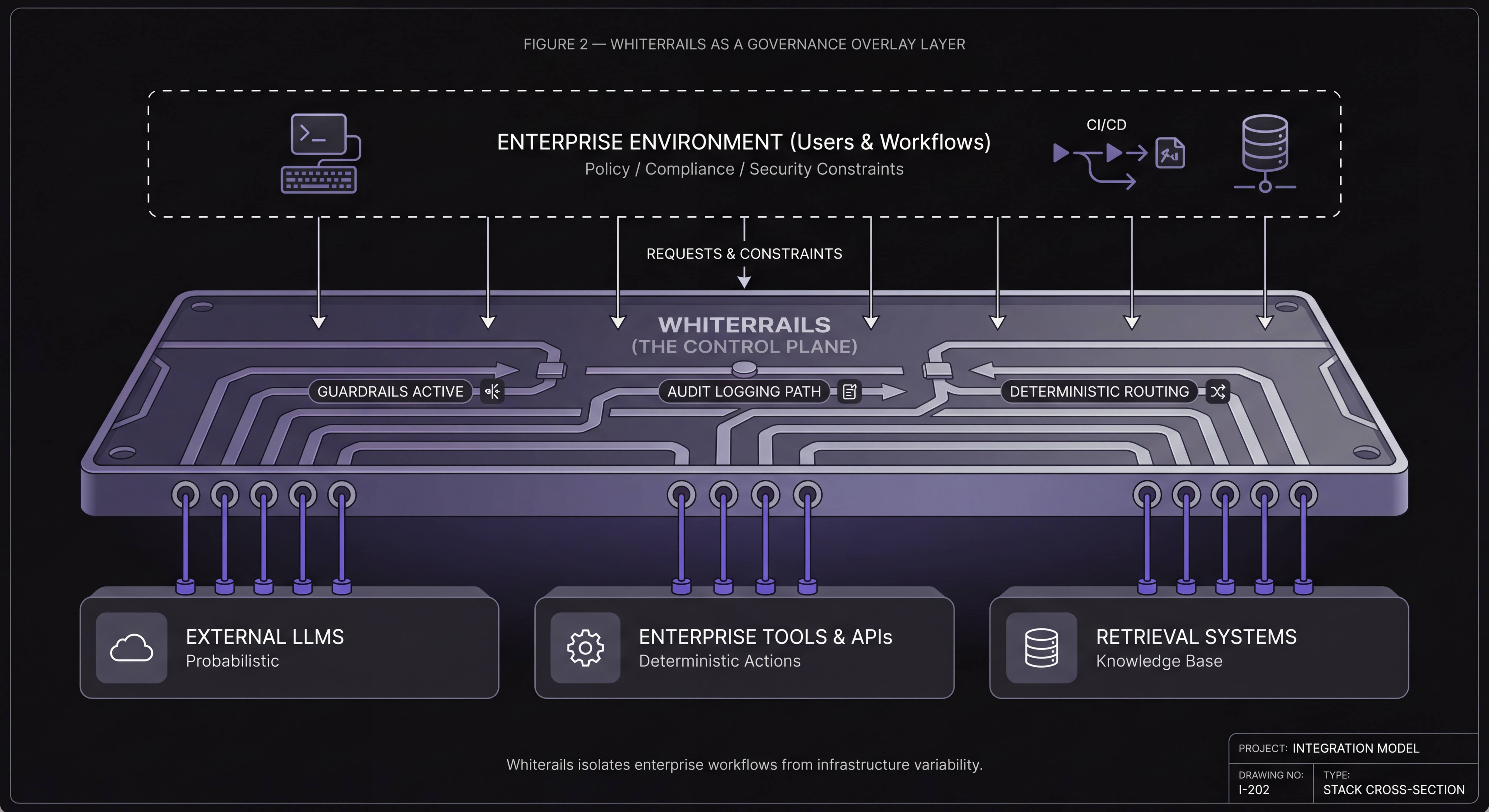

5. Integration Model

Whiterails is designed as an overlay on top of existing infrastructure.

Supported integrations:

- LLMs (OpenAI, Anthropic, Google, self-hosted models)

- Developer tools and IDEs

- Internal enterprise APIs

- Knowledge bases

- Retrieval systems

- Workflow engines

Because reasoning plans are explicit, enterprises can enforce policy controls, compliance constraints, audit trails, and deterministic re-execution without modifying their existing AI stack.
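Because every tool call appears in the plan before it runs, a policy can be evaluated up front and independently of the model vendor. A minimal deny-by-default sketch; the tool names, data classifications, `ToolCall`, `POLICY`, and `check_policy` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    step: str
    tool: str         # hypothetical tool identifiers, e.g. "internal_crm"
    data_class: str   # sensitivity label of the data the step sends


# Hypothetical policy: which tools may receive each data classification.
POLICY: dict[str, set[str]] = {
    "public": {"hosted_llm", "onprem_llm", "internal_crm"},
    "confidential": {"onprem_llm", "internal_crm"},
}


def check_policy(calls: list[ToolCall], policy: dict[str, set[str]]) -> list[str]:
    """Deny by default: a call passes only if its data class explicitly permits the tool."""
    denials = []
    for call in calls:
        permitted = policy.get(call.data_class, set())  # unknown classes permit nothing
        if call.tool not in permitted:
            denials.append(f"{call.step}: {call.data_class} data may not be sent to {call.tool}")
    return denials


plan = [
    ToolCall("s1", "internal_crm", "confidential"),
    ToolCall("s2", "hosted_llm", "confidential"),
    ToolCall("s3", "hosted_llm", "public"),
]
print(check_policy(plan, POLICY))  # ['s2: confidential data may not be sent to hosted_llm']
```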

6. Use Cases

Whiterails unlocks reliable automation across multiple domains:

Software Engineering

- Code explanation

- Debugging

- Step-by-step algorithmic reasoning

- Safe code generation with traceability

- CI/CD pipeline integration

Financial Services

- Deterministic risk calculations

- Policy-compliant reasoning

- Auditable decision traces

Legal and Compliance

- Structured document reasoning

- Verifiable logic chains

- Regulatory alignment

Enterprise Automation

- Workflow orchestration

- Tool-based problem solving

- Decision augmentation

7. Why Enterprises Need a Reasoning Layer

LLMs alone cannot satisfy enterprise requirements because:

- their outputs cannot be reproduced deterministically

- debugging is difficult due to lack of traceability

- hallucinations pose safety risks

- models cannot express their reasoning steps reliably

- every vendor model behaves differently, making governance hard

Whiterails solves this by:

- Isolating reasoning from language

- Exposing transparent steps

- Enforcing deterministic execution

- Enabling flexible integration

- Providing a single control layer regardless of model vendor

7.1. Verified Reasoning vs Traditional Orchestration

Conventional agent frameworks rely on emergent behavior and probabilistic reasoning. They can compose tool calls, but they cannot guarantee that the reasoning sequence is complete, consistent, reproducible, or free of silent structural changes.

Whiterails introduces verified reasoning: a workflow in which explicit representations of intent, entities, and steps are validated before they can influence downstream systems.

This transforms AI from a best-effort process into a governed, deterministic layer suitable for enterprise-critical environments.

8. Security and Governance

Whiterails adheres to strict enterprise-grade security guidelines:

- On-premise deployment available

- Full control over data flows

- Deterministic reasoning traces for audit

- No retention of customer data

- Optional encrypted logging
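Audit trails for reasoning traces are often made tamper-evident by chaining each log entry to the hash of the previous one. The sketch below shows that general technique with the standard `hashlib` module; it is not a description of Whiterails' logging format, it provides tamper evidence rather than encryption, and `append` and `verify_chain` are hypothetical helpers.

```python
import hashlib
import json
from typing import Any

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict[str, Any]) -> str:
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append(log: list[dict[str, Any]], record: dict[str, Any]) -> None:
    """Append a trace record, chaining it to the hash of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": _entry_hash(prev, record)})


def verify_chain(log: list[dict[str, Any]]) -> bool:
    """Recompute every link. Editing or reordering entries, or removing one from the
    middle, breaks the chain; detecting truncation of the tail additionally requires
    storing the latest hash somewhere outside the log."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


audit: list[dict[str, Any]] = []
append(audit, {"step": "s1", "op": "filter", "status": "ok"})
append(audit, {"step": "s2", "op": "sum", "status": "ok", "output": 150})
print(verify_chain(audit))  # True
audit[0]["record"]["status"] = "error"  # simulate tampering with a logged step
print(verify_chain(audit))  # False
```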

We design for environments where confidentiality, explainability, and governance are non-negotiable.

9. Roadmap (Public Edition)

The Whiterails system is evolving toward three milestones:

Phase 1: Reasoning Engine for Software Development

Available

- Structured interpretation of programming queries

- Deterministic execution

- LLM-agnostic integration

- Enterprise connectors

Phase 2: Multi-Domain Reasoning Framework

In Development

- Generalizable task representation

- Domain-specific operator libraries

- Enterprise workflow automation

Phase 3: Adaptive Reasoning Models

Research

- Compact reasoning-focused models

- Dynamic integration of external knowledge modules

- Enhanced determinism and self-correction

(Technical details of future research withheld in this public edition.)

10. Conclusion

As enterprises adopt AI at scale, the limitations of probabilistic, opaque reasoning become increasingly clear.

Whiterails provides a new foundation: a transparent, deterministic, modular reasoning layer that complements—rather than replaces—existing LLMs.

The result is AI that is:

- more reliable

- more auditable

- more controllable

- more aligned with enterprise requirements

Whiterails enables organizations to deploy AI systems with the confidence, predictability, and governance they expect from mission-critical software.

By separating reasoning from language and enforcing verification at each stage, Whiterails provides not just visible reasoning but verified reasoning: an essential foundation for enterprise AI.